Stop Tuning Thresholds. Start Learning Patterns with TinyML

1. The Problem Appears After Deployment

Consider an embedded device that monitors vibration from a small electric motor. The system uses an accelerometer and a fixed threshold to detect abnormal behavior. During development, vibration data is collected in the lab, thresholds are tuned, and the system behaves as expected. Normal operation stays below the limit, while known fault conditions clearly exceed it.
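To make the starting point concrete, the fixed-threshold check described above might look roughly like the following sketch. The limit value, the function names, and the choice of mean-square energy as the measured quantity are all assumptions for illustration; the original system may compute something different.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical limit tuned in the lab, in g (RMS). */
#define VIBRATION_RMS_LIMIT_G 0.5f

/* Mean of the squared samples in one accelerometer window. */
float window_mean_square(const float *samples, size_t count)
{
    if (count == 0) {
        return 0.0f;
    }
    float sum_sq = 0.0f;
    for (size_t i = 0; i < count; i++) {
        sum_sq += samples[i] * samples[i];
    }
    return sum_sq / (float)count;
}

/* Fixed-threshold decision: true when the window looks abnormal.
 * Comparing the mean square against the squared limit is equivalent to
 * comparing RMS against the limit, and avoids a square root. */
bool vibration_is_abnormal(const float *samples, size_t count)
{
    const float limit_sq = VIBRATION_RMS_LIMIT_G * VIBRATION_RMS_LIMIT_G;
    return window_mean_square(samples, count) > limit_sq;
}
```

Everything the detector knows about a fault is contained in that single constant, which is why it works only as long as field conditions match the lab.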

Once deployed, the situation changes. Some devices begin to trigger false alarms during normal operation, while others fail to detect real faults. The firmware is identical across devices, and the hardware revision has not changed. What differs is the environment: mounting, background noise, and operating conditions vary from one installation to the next and change the vibration patterns. As the motors age, the signal drifts even further.

From a software perspective, nothing is obviously wrong. The logic is simple, deterministic, and well tested. Yet the behavior is inconsistent and difficult to reproduce. This is a common situation in embedded systems that rely on sensor data. The failure does not come from incorrect code, but from the limits of the decision logic itself.

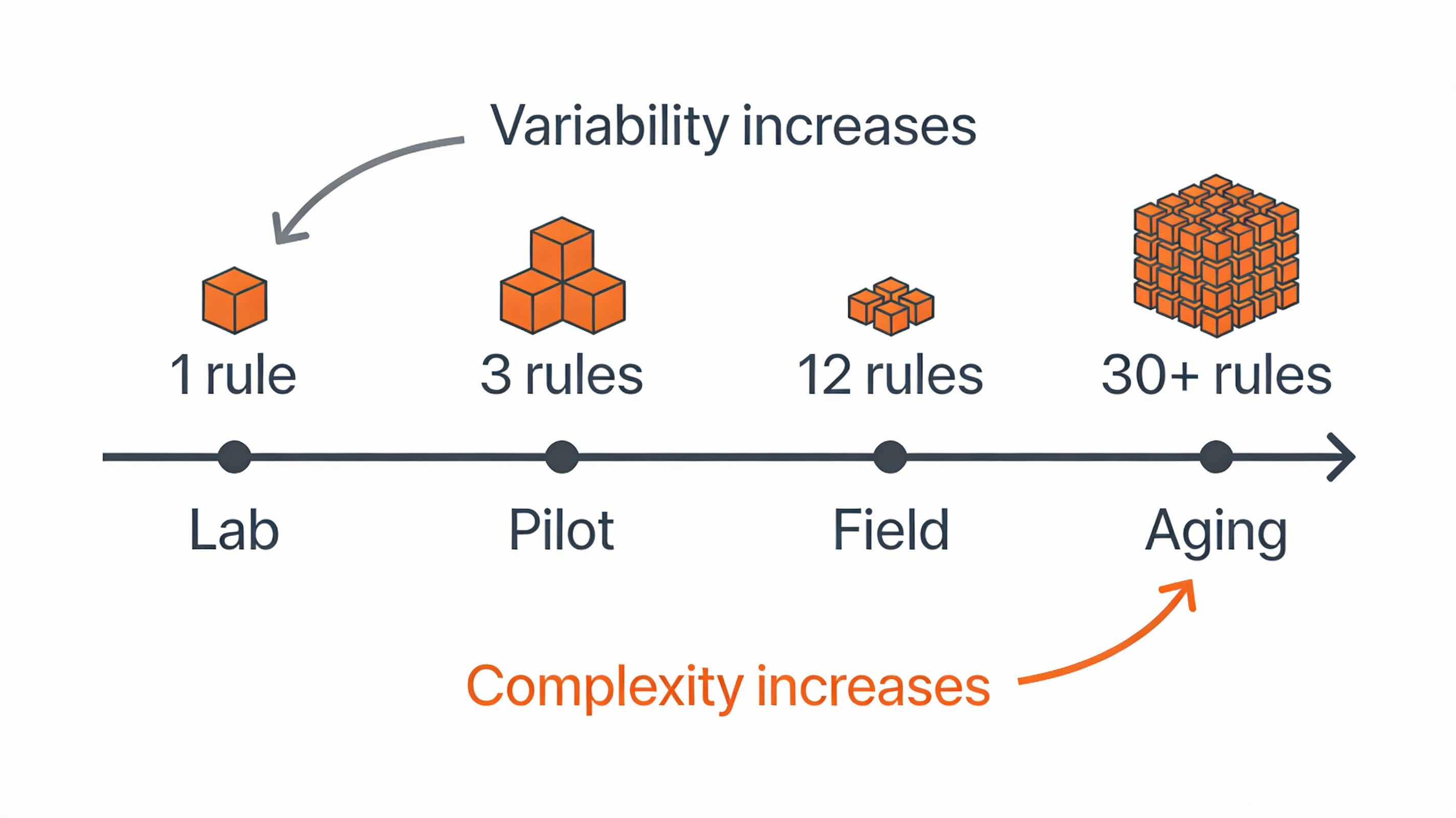

2. Why Adding More Rules Makes Things Worse

The first reaction to this problem is to refine the existing logic. Thresholds are adjusted to reduce false alarms. Extra conditions are added to distinguish between operating modes. Filters are introduced to smooth the signal and ignore short spikes. Each change improves behavior in one scenario while making it worse in another.

In the vibration monitoring system, the signal is influenced by factors that are difficult to control. Motors are mounted differently, surrounding structures resonate at different frequencies, and vibration characteristics evolve over time. Fixed thresholds that work for one device fail for another. Over time, the logic grows into a collection of special cases that is hard to reason about and even harder to validate.
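The kind of logic this process tends to produce can be sketched as follows. The operating modes, mount types, and every numeric constant are invented for illustration; each one stands in for a patch added after a single field observation.

```c
#include <stdbool.h>

/* Hypothetical operating modes and mount types. */
typedef enum { MODE_IDLE, MODE_RAMP_UP, MODE_STEADY } motor_mode_t;
typedef enum { MOUNT_RIGID, MOUNT_RUBBER } mount_type_t;

/* Every constant here is a made-up patch for one observed field case. */
bool rule_based_fault(float rms_g, motor_mode_t mode, mount_type_t mount,
                      float motor_age_hours)
{
    float limit = 0.50f;            /* original lab threshold */

    if (mode == MODE_RAMP_UP) {
        limit = 0.90f;              /* start-up is noisier */
    } else if (mode == MODE_IDLE) {
        limit = 0.20f;              /* idle should be quiet */
    }
    if (mount == MOUNT_RUBBER) {
        limit *= 0.7f;              /* damped mounts read lower */
    }
    if (motor_age_hours > 5000.0f) {
        limit += 0.10f;             /* older motors vibrate more */
    }
    return rms_g > limit;
}
```

The same reading of 0.40 g is a fault on a new rubber-mounted motor, but not on a rigid mount or on an aged rubber-mounted one. Each branch is easy to read on its own; the combinations are what become hard to validate.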

Debugging becomes frustrating because failures depend on real-world conditions rather than clear software faults. The code behaves exactly as written, yet the system still produces incorrect results. At this point, adding more rules increases complexity without addressing the root of the problem.

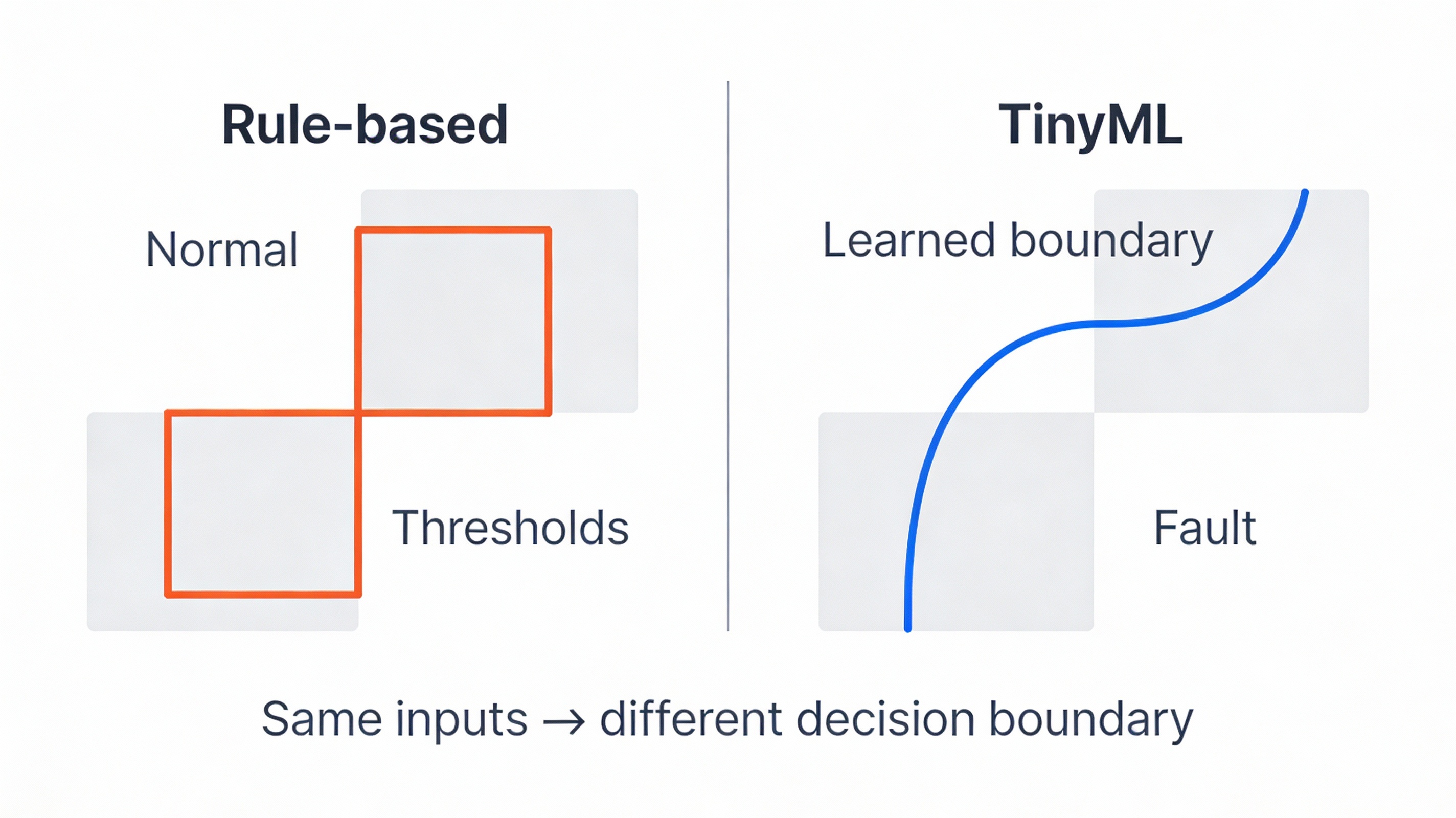

3. TinyML as a Different Way to Express the Decision

TinyML addresses this type of problem by changing how decisions are expressed. Instead of defining fault conditions using hand-tuned thresholds and conditional logic, a model is trained on vibration data collected from both normal and faulty motors under a range of conditions. The model learns patterns in the signal that are difficult to describe explicitly but that tend to hold across devices and environments, provided the training data covers that variation.

From an embedded perspective, this is not about adding intelligence in a general sense. It is about replacing fragile decision logic with a compact representation of real signal behavior. The goal is not perfect accuracy, but more stable and repeatable behavior once the system is deployed.

In the vibration monitoring example, this approach does not eliminate noise or variation, but it tolerates them better than fixed thresholds. Devices that previously behaved inconsistently begin to produce similar results under comparable operating conditions.

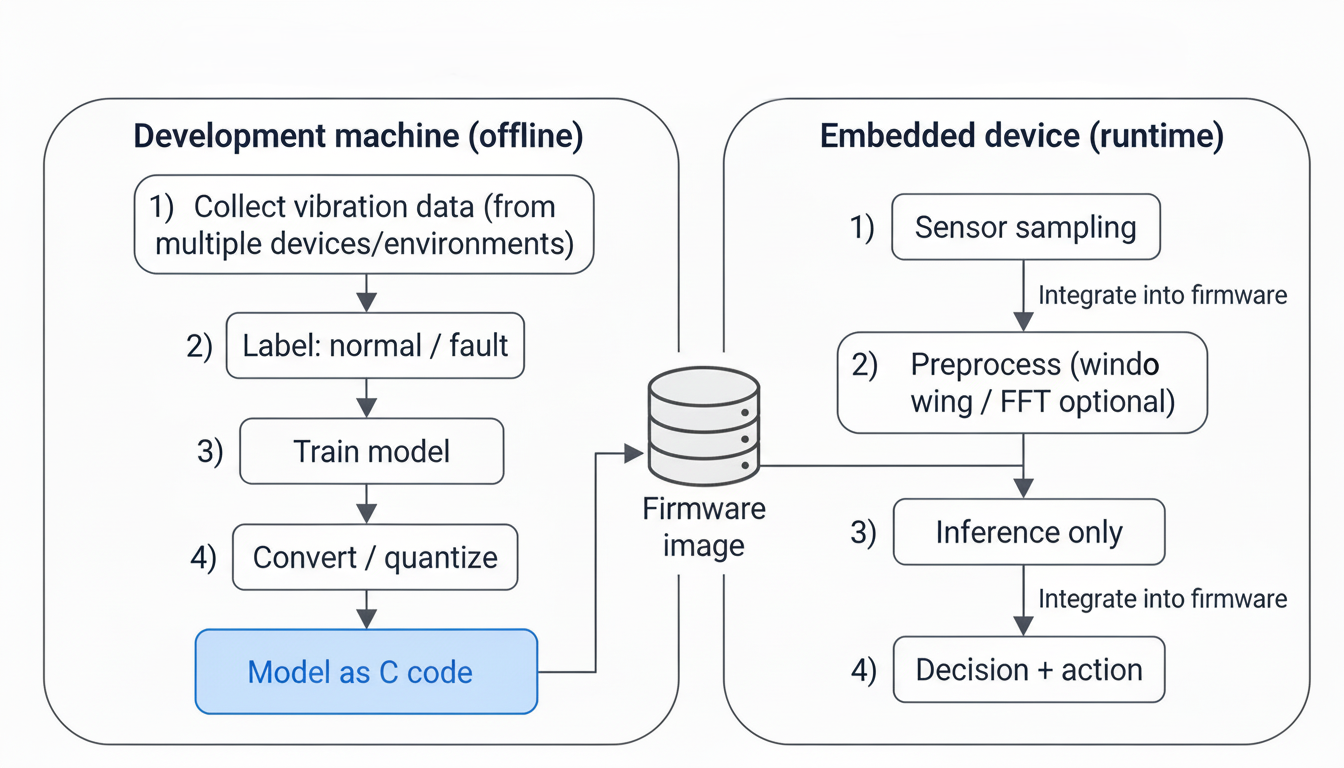

4. How TinyML Is Built and Deployed

The development workflow for TinyML fits naturally into existing embedded practices. A useful way to think about it is to compare it to cross-compiling. When developing embedded software, source code is written and compiled on a development machine, then executed on a constrained target. The embedded device does not perform compilation; it simply runs the final binary.

TinyML follows the same pattern. Vibration data is collected from multiple devices and environments and used to train a model offline on a development system. Once training is complete, the model is converted into a compact representation and integrated into the firmware. On the embedded device, only inference is performed.

At runtime, the device treats the model like any other prebuilt component. Incoming vibration samples are fed into the model, a fixed sequence of operations is executed, and an output is produced. The device does not know how the model was trained, just as it does not know how source code was compiled. This separation keeps runtime behavior simple and predictable.
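As a rough illustration of "a fixed sequence of operations", the sketch below hand-codes a very small network: one dense layer with ReLU followed by a single output. The layer sizes, the weight values, and the function name `model_infer` are all hypothetical, and this is not the output of any particular conversion tool. In practice the parameters would be generated offline from the trained model, and inference would usually be handled by an existing runtime rather than hand-written loops.

```c
#include <stddef.h>

#define MODEL_INPUTS 4
#define MODEL_HIDDEN 3

/* Hypothetical parameters. In a real project these arrays would be
 * generated offline from the trained model and placed in flash. */
static const float W1[MODEL_HIDDEN][MODEL_INPUTS] = {
    { 0.8f, -0.2f,  0.1f,  0.0f},
    {-0.5f,  0.9f,  0.3f, -0.1f},
    { 0.2f,  0.1f, -0.7f,  0.6f},
};
static const float B1[MODEL_HIDDEN] = {0.1f, -0.2f, 0.0f};
static const float W2[MODEL_HIDDEN] = {1.2f, -0.7f, 0.9f};
static const float B2 = -0.5f;

/* Runs the fixed sequence: dense layer, ReLU, dense output.
 * Returns a raw score; higher means "fault more likely". */
float model_infer(const float features[MODEL_INPUTS])
{
    float score = B2;
    for (size_t h = 0; h < MODEL_HIDDEN; h++) {
        float acc = B1[h];
        for (size_t i = 0; i < MODEL_INPUTS; i++) {
            acc += W1[h][i] * features[i];
        }
        if (acc < 0.0f) {
            acc = 0.0f;             /* ReLU */
        }
        score += W2[h] * acc;
    }
    return score;
}
```

There is no allocation and no data-dependent loop bound, so the execution time is essentially the same for every input. That is the property that keeps inference compatible with the usual timing analysis of an embedded system.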

5. What Changes for the Embedded Developer

Adopting TinyML changes how some design decisions are made. In the vibration monitoring system, effort shifts away from tuning thresholds and toward collecting representative data. Memory planning becomes more important: the model parameters typically live in flash, while the input window and the intermediate buffers used during inference occupy RAM, and both must fit alongside the rest of the firmware.
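One way to keep memory planning honest is to allocate everything statically and let the compiler check the budget. The sketch below assumes a C11 toolchain (for `_Static_assert`); the budget figures, buffer sizes, and names are hypothetical placeholders rather than values from any real model or target.

```c
#include <stddef.h>
#include <stdint.h>

/* All figures are hypothetical; take real ones from the target's
 * memory map and from the converted model. */
#define FLASH_BUDGET_FOR_MODEL_BYTES   (32u * 1024u)
#define RAM_BUDGET_FOR_INFERENCE_BYTES (8u * 1024u)
#define MODEL_WINDOW_SAMPLES           128u

/* Model parameters: const, so the linker can place them in flash. */
const int8_t model_weights[20u * 1024u] = {0};

/* Working memory: statically allocated, so RAM use is known at build time. */
int8_t  inference_scratch[6u * 1024u];
int16_t sample_window[MODEL_WINDOW_SAMPLES];

/* The build fails if the model or its buffers outgrow the budget. */
_Static_assert(sizeof(model_weights) <= FLASH_BUDGET_FOR_MODEL_BYTES,
               "model does not fit in the flash budget");
_Static_assert(sizeof(inference_scratch) + sizeof(sample_window)
                   <= RAM_BUDGET_FOR_INFERENCE_BYTES,
               "inference buffers exceed the RAM budget");

/* Sizes exposed for logging or a build report. */
size_t model_flash_bytes(void)
{
    return sizeof(model_weights);
}

size_t inference_ram_bytes(void)
{
    return sizeof(inference_scratch) + sizeof(sample_window);
}
```

With this arrangement, retraining a larger model that no longer fits shows up as a compile error instead of a field failure.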

Decision logic also changes. Instead of comparing sensor values against fixed limits, the system interprets a model output, typically a score that represents confidence or likelihood of a fault. Application code must decide how to act on this score, often combining it with timing or state information so that a single borderline result does not toggle an alarm.
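A minimal sketch of combining the model output with state and timing is shown below. It assumes the score is normalized to the range 0 to 1 and that one score arrives per inference window; the thresholds, window counts, and names are hypothetical tuning choices, not recommendations.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical tuning values. */
#define ALARM_ENTER_SCORE   0.80f  /* score that counts toward raising */
#define ALARM_EXIT_SCORE    0.40f  /* score that counts toward clearing */
#define ALARM_ENTER_WINDOWS 3u     /* consecutive high windows to raise */
#define ALARM_EXIT_WINDOWS  5u     /* consecutive low windows to clear */

typedef struct {
    bool    alarm_active;
    uint8_t streak;  /* consecutive windows supporting a state change */
} alarm_state_t;

/* Feed one model score per inference window; returns the alarm state. */
bool alarm_update(alarm_state_t *st, float score)
{
    if (!st->alarm_active) {
        /* Raise only after several consecutive high scores. */
        st->streak = (score >= ALARM_ENTER_SCORE)
                         ? (uint8_t)(st->streak + 1u) : 0u;
        if (st->streak >= ALARM_ENTER_WINDOWS) {
            st->alarm_active = true;
            st->streak = 0u;
        }
    } else {
        /* Clear only after several consecutive low scores. */
        st->streak = (score <= ALARM_EXIT_SCORE)
                         ? (uint8_t)(st->streak + 1u) : 0u;
        if (st->streak >= ALARM_EXIT_WINDOWS) {
            st->alarm_active = false;
            st->streak = 0u;
        }
    }
    return st->alarm_active;
}
```

The gap between the enter and exit scores provides hysteresis, and the streak counters act as a debounce. Scores that hover between 0.40 and 0.80 leave the current state unchanged.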

Debugging feels different as well. Instead of tracing complex conditional logic, attention shifts to the quality of input data and the consistency of model behavior across conditions. Timing, power consumption, and determinism remain just as important as in traditional embedded designs.

6. When TinyML Is Worth Using

TinyML is not always the right solution. In the vibration example, a simple threshold may be sufficient if operating conditions are tightly controlled and variation is minimal. TinyML becomes valuable when behavior varies across devices, environments, or time, and when maintaining rule-based logic becomes increasingly difficult.

Problems involving continuous sensor data, small fixed-size inputs, and tolerance for approximate results are good candidates. At the same time, TinyML introduces costs in memory usage, execution time, and development workflow. These trade-offs must be evaluated carefully for each system.