Linux Swapping Demystified: Why It Happens and When to Turn It Off

1. Introduction

In the early days of computing, programs often outgrew the limited memory available on machines. To cope with this, programmers had to manually split their code into chunks called overlays. The system would load one overlay into memory, run it, then swap it out for the next. This was tedious, error-prone, and placed a heavy burden on developers.

Eventually, researchers came up with a smarter approach: virtual memory (Fotheringham, 1961). With virtual memory, the operating system itself decides which parts of a program stay in RAM and which are temporarily stored on disk. This innovation freed developers from the hassle of overlays and opened the door to running much larger programs on limited hardware.

2. What is Swapping?

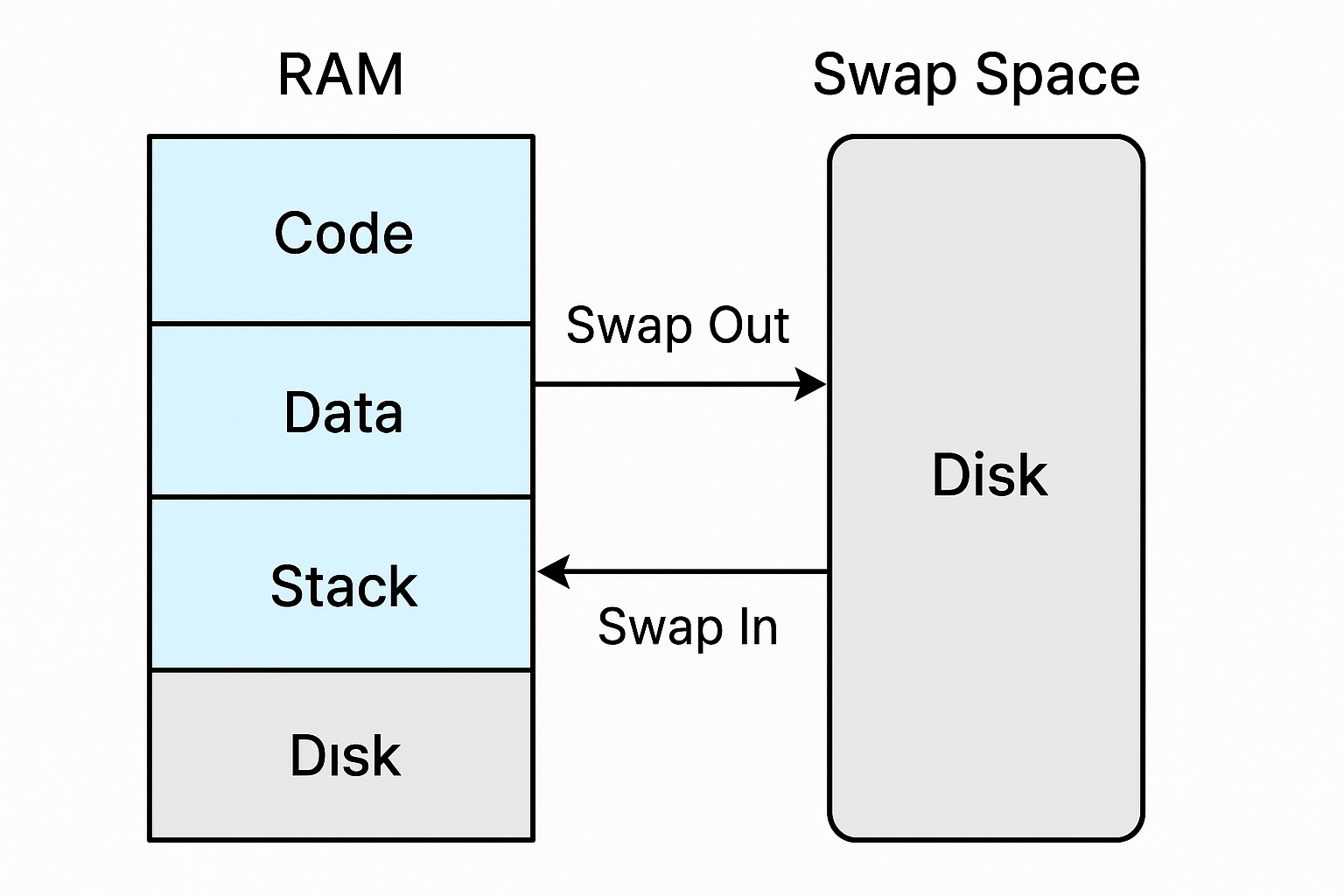

Virtual memory makes it possible for programs to be larger than the machine’s physical memory. The operating system ensures that the active parts of a program, such as its code, data, and stack, remain in main memory, while inactive parts are stored temporarily in swap space on disk.

When the working set of a process grows beyond the available physical RAM, the system begins swapping: moving some memory pages out to disk to free space and pulling them back in when needed. For instance, a 512 MB program can still run on a machine with only 256 MB of RAM, provided the OS constantly shuffles which 256 MB are kept in memory at any given moment.

This mechanism becomes especially powerful in multiprogramming systems. While one program is paused, waiting for its swapped-out pages to be read back from disk, the CPU can be assigned to another program. In this way, the system makes efficient use of resources and maintains good overall utilization.

Swapping works by dividing memory into fixed-size blocks called pages. The operating system tracks which pages are currently in RAM and which have been moved to swap space. When RAM is full, the kernel selects pages that haven’t been used recently and writes them out to disk, freeing their slots in physical memory. If a process later accesses one of those swapped-out pages, a page fault occurs, and the OS pauses the process until it can load the missing page back into RAM. This gives the illusion that a computer has more memory than it physically does, but it comes with a trade-off in speed.

Figure 1: Page swapping shifts memory pages between RAM and disk as needed

3. Proving Swapping in Practice

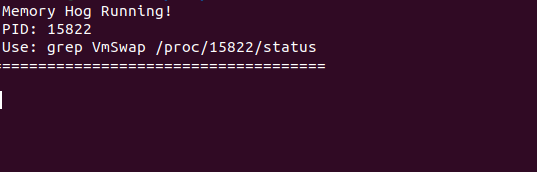

Understanding swapping in theory is one thing, but it becomes much clearer when we can see it happening on a real system. Linux exposes detailed information about memory usage through the /proc filesystem, and one of the easiest ways to confirm whether a process is being swapped is by checking the file /proc/<PID>/status. Inside this file, the field VmSwap shows how much of that process’s memory has been pushed to swap space.
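The same check can be done programmatically. The sketch below is one possible way to read the field, assuming the usual `Name:   value kB` layout of the status file; the function name `read_status_kb` is hypothetical and not part of any library.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: return the value in kB of a "Name:   1234 kB" field
 * from a /proc status file, or -1 if the file cannot be read or the field
 * is absent. */
long read_status_kb(const char *path, const char *field)
{
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;

    char line[256];
    long value = -1;
    size_t n = strlen(field);

    while (fgets(line, sizeof line, f)) {
        /* Match "<field>:" at the start of the line. */
        if (strncmp(line, field, n) == 0 && line[n] == ':') {
            /* %ld skips the tabs and spaces before the number. */
            if (sscanf(line + n + 1, "%ld", &value) != 1)
                value = -1;
            break;
        }
    }
    fclose(f);
    return value;
}
```

A process can inspect itself with `read_status_kb("/proc/self/status", "VmSwap")`, or another process by substituting its PID in the path.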

To test this, we first run a simple C program that allocates a large block of memory and then sleeps:

    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    int main(void)
    {
        size_t size = 1024L * 1024L * 1024L; // 1 GB

        char *p = malloc(size);
        if (!p)
        {
            perror("malloc failed");
            return 1;
        }

        /* Touch every page (assuming 4 KiB pages) so the kernel actually backs it with RAM */
        for (size_t i = 0; i < size; i += 4096)
        {
            p[i] = 1;
        }

        printf("=====================================\n");
        printf(" Memory Hog Running! \n");
        printf(" PID: %d\n", getpid());
        printf(" Use: grep VmSwap /proc/%d/status\n", getpid());
        printf("=====================================\n\n");

        /* Stay alive so the process can be inspected from another terminal */
        while (1)
        {
            sleep(10);
        }

        return 0;
    }

On startup, if we inspect /proc/<PID>/status, we will usually see VmSwap: 0 kB.

To see non-zero swap, we will now create memory pressure using stress-ng so the kernel decides to evict some pages:

    # consume 90% of memory for 60 seconds
    stress-ng --vm 2 --vm-bytes 90% --timeout 60s

While the stress test is running, checking VmSwap again shows that our process is being swapped out.

In our run, VmSwap reported roughly 69 MB, which confirms that part of our process’s memory had been swapped out to disk.

4. Disabling Swapping with Syscalls

Now that we’ve seen how swapping works and how to measure it, the next question is: can we stop it for a specific process? The answer is yes.

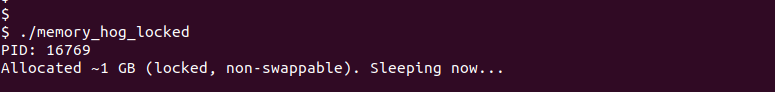

The most common approach in Linux is the mlockall system call. When a process calls mlockall(MCL_CURRENT | MCL_FUTURE), it tells the kernel to keep all current pages of the process in RAM and to also lock any future allocations. In practice, this means the operating system is not allowed to swap out any of the program’s memory, ensuring that all of its data stays resident in physical memory.

Example:

    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>
    #include <sys/mman.h>

    int main(void)
    {
        size_t size = 1024L * 1024L * 1024L; // 1 GB

        /* Lock all current and future memory */
        if (mlockall(MCL_CURRENT | MCL_FUTURE) != 0)
        {
            perror("mlockall failed");
            return 1;
        }

        char *p = malloc(size);
        if (!p)
        {
            perror("malloc failed");
            return 1;
        }

        /* Touch every page (assuming 4 KiB pages) so it is resident and locked */
        for (size_t i = 0; i < size; i += 4096)
        {
            p[i] = 1;
        }

        printf("PID: %d\n", getpid());
        printf("Allocated ~1 GB (locked, non-swappable). Sleeping now...\n");

        /* Stay alive so the process can be inspected from another terminal */
        while (1)
        {
            sleep(10);
        }

        return 0;
    }

When we repeat the stress test against this version, VmSwap stays at 0 kB even under heavy memory pressure. This proves that the syscall is working and the kernel is respecting the memory lock.

It’s important to note that disabling swap for a process is not free of consequences. If you lock too much memory and your system runs out of RAM, the Out-Of-Memory (OOM) killer may terminate processes to free up space. This is why memory locking should be used carefully, usually only for workloads where latency is critical and the working set size is well understood.
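One guard the kernel itself applies is the `RLIMIT_MEMLOCK` resource limit, which caps how much memory an unprivileged process may lock (processes with the `CAP_IPC_LOCK` capability are not bound by it). As a hedged sketch, a program could compare its planned locked size against that limit before calling `mlockall`; the helper name `memlock_limit_allows` is an assumption made for this example.

```c
#include <stddef.h>
#include <sys/resource.h>

/* Hypothetical helper: return 1 if locking `bytes` fits within the soft
 * RLIMIT_MEMLOCK limit, 0 if it does not, and -1 if the limit cannot be
 * read. Privileged processes may exceed the limit regardless. */
int memlock_limit_allows(size_t bytes)
{
    struct rlimit rl;

    if (getrlimit(RLIMIT_MEMLOCK, &rl) != 0)
        return -1;
    if (rl.rlim_cur == RLIM_INFINITY)
        return 1;
    return (rlim_t)bytes <= rl.rlim_cur ? 1 : 0;
}
```

This only checks the per-process limit. It says nothing about whether the machine has enough free RAM, so the working set still needs to be sized against the physical memory actually available.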

Remark: In addition to mlockall, Linux also provides the mlock syscall, which allows you to lock only specific memory regions or pages instead of the entire process. This is useful when you only need to protect small, sensitive areas of memory while leaving the rest swappable.
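A minimal sketch of this region-level locking follows. The helpers `alloc_locked` and `free_locked` are hypothetical names chosen for illustration; the sketch assumes a small buffer (for example, key material) and simply gives up if the lock cannot be obtained.

```c
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: allocate a page-aligned, zeroed buffer and pin it in
 * RAM with mlock(). Returns NULL if the allocation or the lock fails. */
void *alloc_locked(size_t len)
{
    void *buf = NULL;
    long page = sysconf(_SC_PAGESIZE);

    if (posix_memalign(&buf, (size_t)page, len) != 0)
        return NULL;
    if (mlock(buf, len) != 0) {       /* e.g. RLIMIT_MEMLOCK exceeded */
        free(buf);
        return NULL;
    }
    memset(buf, 0, len);
    return buf;
}

/* Unlock and release a buffer obtained from alloc_locked(). */
void free_locked(void *buf, size_t len)
{
    if (!buf)
        return;
    munlock(buf, len);
    free(buf);
}
```

Because the kernel locks whole pages, aligning the buffer to a page boundary keeps the locked region from covering unrelated heap data at its start. The rest of the process remains swappable.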

5. When Should We Disable Swapping?

The performance gap between RAM and disk is huge. Accessing RAM takes nanoseconds, while fetching a page from swap space may take microseconds on fast solid-state storage or even milliseconds on a spinning disk. A few page faults might go unnoticed, but heavy swapping can cause noticeable pauses, higher latency, or, in extreme cases, thrashing, where the system spends more time shuffling data between RAM and disk than actually running applications. Not all memory is equal in this process: clean file-backed pages, such as code or cached files, can simply be dropped and re-read from their file later, while anonymous pages from a program’s heap or stack must first be written to swap space, making them more costly to evict.

In embedded systems, swapping is often unacceptable. Many embedded devices are designed to handle deterministic tasks, where operations must be completed within strict time limits. A controller that regulates engine timing or an industrial safety system cannot tolerate unpredictable delays caused by waiting for swapped-out memory pages. In such environments, predictable timing matters more than maximizing memory usage, so these systems are typically built without swap space at all, or with swap explicitly disabled. By forcing everything to stay in RAM, developers remove swap-in delays as a source of unpredictability, which makes consistent response times and met deadlines far easier to achieve.
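On such a device, a startup check could confirm that no swap is configured before time-critical work begins. The following sketch uses the Linux `sysinfo` call for this; the function names `swap_used_bytes` and `swap_is_absent` are hypothetical and chosen only for this example.

```c
#include <sys/sysinfo.h>

/* Hypothetical helper: swap currently in use, in bytes, computed from a
 * filled-in struct sysinfo. Sizes are reported in units of mem_unit bytes. */
unsigned long long swap_used_bytes(const struct sysinfo *si)
{
    return (unsigned long long)(si->totalswap - si->freeswap) * si->mem_unit;
}

/* Hypothetical helper: return 1 if the system has no swap configured,
 * 0 if it does, and -1 if the information cannot be read. */
int swap_is_absent(void)
{
    struct sysinfo si;

    if (sysinfo(&si) != 0)
        return -1;
    return si.totalswap == 0 ? 1 : 0;
}
```

A critical service might refuse to start, or log a warning, when `swap_is_absent()` returns 0. What to do in that case is a policy decision for the system designer.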

6. Conclusion

Swapping is a powerful mechanism that allows modern systems to run programs larger than the available physical memory, but it comes with serious performance costs. We observed swapping in practice through the VmSwap field and then prevented it for a single process with mlockall, which keeps a critical process fully in RAM. In general-purpose systems, swap is a safety net, but in environments where deterministic response times are essential, disabling swap is often the only safe option.