# What Is a Hardware Thread (Hyperthreading), and How Does It Differ from a Software Thread?

Introduction

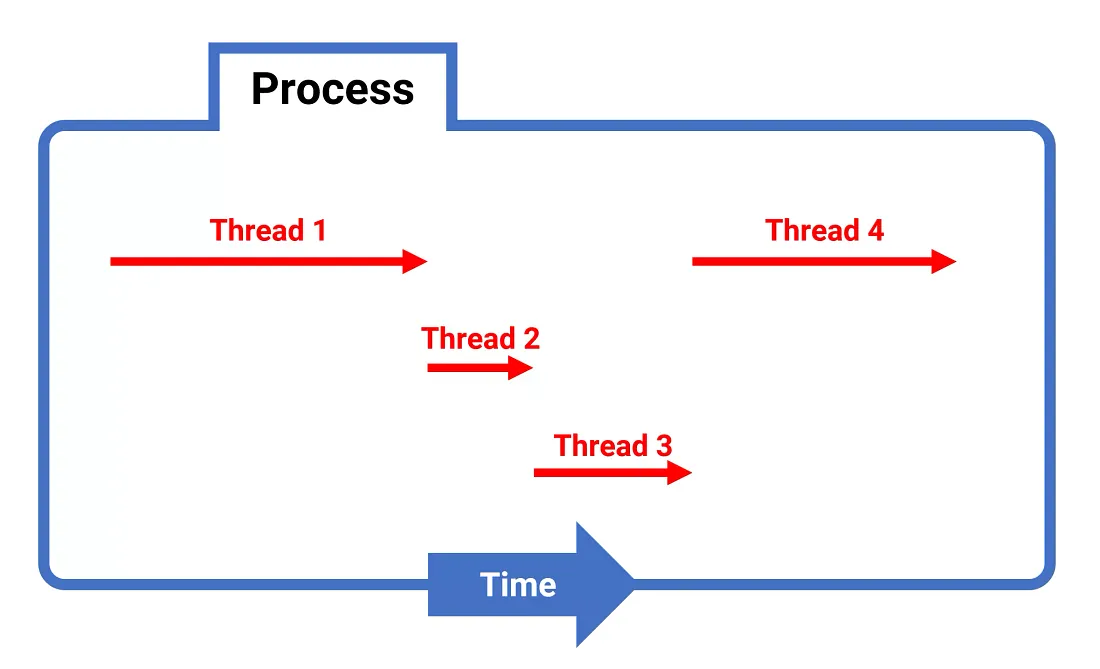

In software programming, threads are mainly used to break a program down into smaller pieces that execute concurrently and compete for resources.

Each thread represents its own execution context and acts as an execution container for the part of the program assigned to it.

This thread-based design lets different parts of your code share hardware resources while keeping track of which thread currently holds each resource, so that other threads cannot take over a resource that is already in use.

From the user's perspective, this looks like a way to parallelize the execution flow of the overall program. In reality there may be only a single CPU executing the running threads, but context switching between threads is so fast and efficient that it creates the illusion of parallel execution.

The overall program no longer blocks, since each thread can run separately without blocking the others, provided the thread loads and the dependency tree have been designed carefully.

Because threads are so important, some processors and architectures handle part of thread creation and management in hardware instead of leaving it entirely to software.

In this article we cover the major differences between software and hardware threads and look at the drawbacks of each type.

What is a Thread?

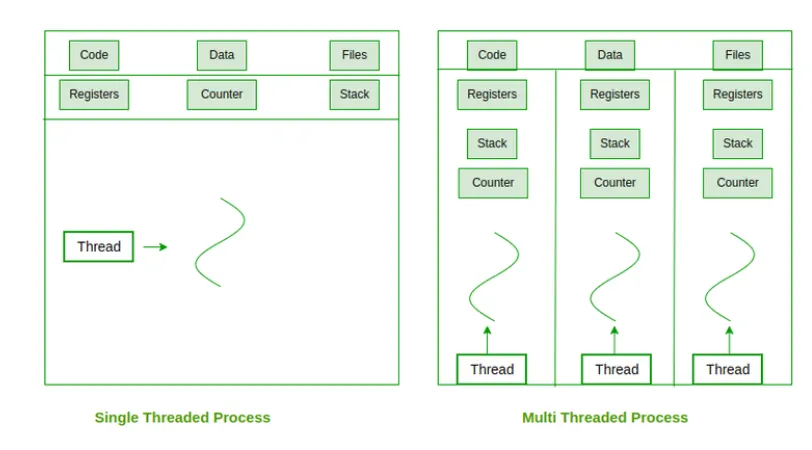

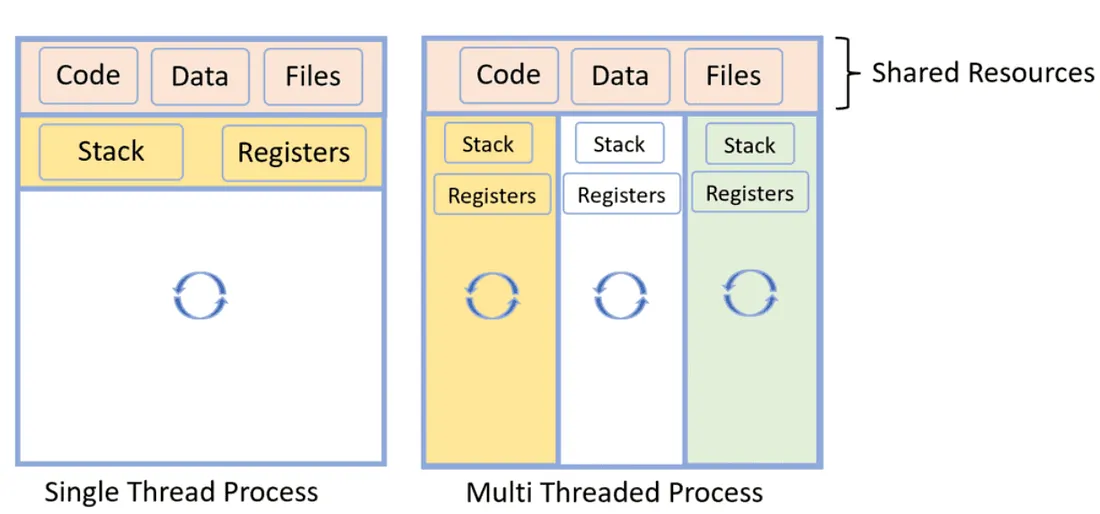

A thread consists of a piece of code that uses CPU resources, namely internal registers and stack memory, for a predefined amount of time. We can have as many threads as the operating system allows. However, the more threads we have, the more challenging it becomes for the operating system to manage them, and in particular to context switch between them.

When it comes to threads, three concepts are critical to the proper functioning of a thread-based design:

- Context Switching

- Resources Sharing

- Isolation

Context Switching

Context switching is the procedure used to switch from one thread to another. It involves saving the current CPU state (including the stack pointer of the current thread), invoking the scheduler to pick the next thread, restoring the saved CPU state of that thread, and finally jumping into it.

Context switching determines the latency of switching back and forth between running threads, which has a big impact on the overall performance of the program.
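
The steps of a context switch can be sketched as a toy model. This is only an illustration in Python and not how any real kernel implements it: the names `Cpu`, `ThreadContext`, and `context_switch`, the three-register layout, and the round-robin scheduler are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ThreadContext:
    """Per-thread save area (hypothetical stand-in for a thread control block)."""
    name: str
    saved_registers: dict = field(
        default_factory=lambda: {"pc": 0, "sp": 0, "r0": 0}
    )


@dataclass
class Cpu:
    """A single set of registers shared by all software threads."""
    registers: dict = field(
        default_factory=lambda: {"pc": 0, "sp": 0, "r0": 0}
    )


def context_switch(cpu, current, ready_queue):
    # 1. Back up the CPU state of the thread being switched out.
    current.saved_registers = dict(cpu.registers)
    ready_queue.append(current)
    # 2. Invoke the scheduler: here a simple round-robin pick.
    nxt = ready_queue.pop(0)
    # 3. Restore the saved CPU state of the chosen thread.
    #    Restoring "pc" is what makes execution jump into it.
    cpu.registers = dict(nxt.saved_registers)
    return nxt


cpu = Cpu()
thread_a = ThreadContext("A")
thread_b = ThreadContext("B", {"pc": 100, "sp": 2000, "r0": 7})

cpu.registers.update(pc=12, sp=1000, r0=42)     # thread A has been running
ready = [thread_b]
running = context_switch(cpu, thread_a, ready)  # A -> B
b_view = dict(cpu.registers)
running = context_switch(cpu, running, ready)   # B -> A
a_view = dict(cpu.registers)
print(b_view, a_view)
```

Every switch copies the full register set twice (one save, one restore), which is where the latency discussed above comes from.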

Resources Sharing

Resource sharing is about the hardware resources that may need to be shared between multiple threads. If this sharing is not managed properly, it can lead to problems such as race conditions, thread starvation, and priority inversion.

To avoid these harmful side effects, a number of techniques can be used for safer resource sharing, such as mutexes, semaphores, signals, locks …
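
As a minimal sketch of the mutex technique, the Python example below protects a shared counter with `threading.Lock`. The counter, the function name `add_many`, and the thread and iteration counts are assumptions chosen for illustration.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def add_many(n):
    global counter
    for _ in range(n):
        # Only one thread at a time may run the read-modify-write below.
        with counter_lock:
            counter += 1


workers = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(counter)
```

With the lock in place, the four threads always add up to 40000. Without it, the increment is a read-modify-write that two threads can interleave, which is the race condition mentioned above.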

Isolation

Isolation means keeping each thread's context separate from that of every other thread.

A thread context here consists of:

- CPU internal registers

- Stack memory

- Shared hardware resources

For the CPU internal registers, context switching creates the isolation through a backup-restore mechanism in the thread exit and entry routines. When a thread is switched out, the content of the CPU internal registers is saved in a per-thread memory structure. When that thread is switched back in, the saved register content is restored for that specific thread.

Each thread has its own stack memory, so its local function variables, buffers, and other stack content do not overlap with the stacks of other threads. During context switching, the CPU stack pointer is updated to point to the stack area of the active thread.

Shared hardware resources are managed, as stated before, through objects such as mutexes, semaphores, locks …
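
The difference between isolated per-thread state and shared state can be illustrated with a small Python sketch. In Python, locals live in per-call frames rather than on a raw machine stack, so this only mirrors the idea. The names `sum_up_to` and `results` are assumptions for the example.

```python
import threading

results = {}
results_lock = threading.Lock()


def sum_up_to(limit):
    total = 0                      # local variable: private to this thread's call
    for i in range(limit + 1):
        total += i
    with results_lock:             # the dict is the only state the threads share
        results[limit] = total


threads = [threading.Thread(target=sum_up_to, args=(n,)) for n in (10, 100, 1000)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(results)
```

Each thread keeps its own `total`, so no thread can corrupt another thread's running sum. Only the shared `results` dictionary needs a lock.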

What is a Software Thread?

A software thread is a normal thread that executes some chunk of your main program and uses system resources to create its own context and execute properly.

You may wonder at this point what makes a software thread different from any other type of thread, and why there are different thread types at all.

To answer this question, we need to recall what we learned earlier in this article about the CPU registers that are part of the thread context.

Software threads share the same set of CPU registers, so isolation between them is managed entirely by the scheduler that performs the context switch. This is why context switching between software threads takes a considerable amount of time.

What is a Hardware Thread?

Like a software thread, a hardware thread is little more than a normal thread that needs its own context in order to execute in isolation from the contexts of other running threads.

However, unlike software threads, hardware threads do not share the CPU internal registers. Each hardware thread has its own set of CPU internal registers, so there is no need to back up and restore them on a context switch.

Context switching latency is reduced, as the CPU internal registers no longer need to be backed up and restored.
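
A toy Python model can make the per-thread register banks concrete. The class `HardwareThreadedCore` and its `select` method are hypothetical. In real hardware both banks feed the pipeline at the same time, so the explicit `select` call exists only to show that no register content is copied.

```python
class HardwareThreadedCore:
    """Toy core with one register bank per hardware thread (hypothetical model)."""

    def __init__(self, hw_threads):
        self.banks = [{"pc": 0, "r0": 0} for _ in range(hw_threads)]
        self.active = 0

    def select(self, hw_thread):
        # Switching hardware threads only changes which bank is in use.
        # Nothing is saved to memory and nothing is restored.
        self.active = hw_thread

    @property
    def registers(self):
        return self.banks[self.active]


core = HardwareThreadedCore(hw_threads=2)
core.registers["r0"] = 11      # hardware thread 0 writes its r0
core.select(1)
core.registers["r0"] = 22      # hardware thread 1 writes its own r0
core.select(0)
print(core.registers["r0"], core.banks[1]["r0"])
```

Both values survive the switches because each hardware thread owns its bank, in contrast to software threads, which must copy a single shared register set in and out.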

Having multiple CPU register banks gives the operating system the illusion of having several processors, which increases the number of tasks that can be scheduled and loaded onto the processor at once.

Hardware threading, which Intel markets as Hyperthreading and which is known more generally as simultaneous multithreading (SMT), was introduced by Intel in 2002 in its Xeon server processors and Pentium 4 desktop processors.

Since then, it has been used in many other Intel processors, such as Atom, Itanium, and the Core i series, among many others.

Although the Hyperthreading name is specific to Intel, the same technique has been deployed in many other processor architectures, such as PowerPC processors from IBM and AMD processors, and ARM has also used it in its Cortex-A65 processor.

As stated before, Hyperthreading gives the operating system the illusion of having multiple processors available. These are also known as virtual CPUs (or logical processors).
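
To see how many virtual CPUs the operating system reports, a short Python sketch like the one below can help. `os.cpu_count()` returns the number of logical processors, which includes hardware threads. The sysfs path is Linux-specific and may not exist on other systems or in some containers, so the code treats it as optional.

```python
import os
from pathlib import Path

# Logical processors visible to the OS (may be None if it cannot be determined).
logical_cpus = os.cpu_count()
print("logical CPUs:", logical_cpus)

# Linux only: sysfs lists which logical CPUs share a physical core with cpu0.
siblings_file = Path("/sys/devices/system/cpu/cpu0/topology/thread_siblings_list")
try:
    siblings = siblings_file.read_text().strip()
except OSError:
    siblings = None  # not Linux, or the file is not exposed here

if siblings is None:
    print("thread sibling information not available on this system")
else:
    print("cpu0 shares its core with logical CPUs:", siblings)
```

If the sibling list names more than one logical CPU, those CPUs are hardware threads of the same physical core.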

What are the drawbacks of Hyperthreading?

Although Hyperthreading lets us instantiate multiple sets of CPU registers on the same CPU, in reality there is still a single CPU operating in the background. Whether we have one set of registers or 100, everything falls back on the same CPU, with its own limited internal resources, running all scheduled tasks.

A CPU is more than its internal set of registers. What matters more are the hardware resources that handle the execution flow and the arithmetic operations. We cannot increase those internal resources unless we add a second CPU to the system, at which point we are talking about multiprocessor programming rather than Hyperthreading.

All threads scheduled on every set of CPU registers are handled by the same CPU execution pipeline, the same multiply unit, the same arithmetic logic unit, and so on. Increasing the number of register sets can therefore decrease performance: we overload the single CPU with instructions, which ends in a long queue of instructions waiting to be executed and in CPU pipeline stalls.

So it comes down to striking a proper balance between how much load the CPU can take given its internal hardware resources (how many ALUs it has, how many multiply units it has, how deep its pipeline is, the size of the Out-Of-Order (OOO) buffer …) and how many sets of registers we should instantiate to keep the CPU busy.

The whole idea behind Hyperthreading is to keep the CPU internal resources as busy as possible. While an instruction that uses the ALU is executing, the multiply unit is free, and other blocks, if available, such as the FPU (Floating Point Unit) or a DSP block for digital signal processing, are not in use either. We therefore try to load as many instructions as we can into the CPU. They are first queued in what is called the Out-Of-Order execution buffer (OOO buffer) before being dispatched to the CPU pipeline for execution. When dispatching, the CPU determines whether there are dependencies between instruction results and input operands and whether instructions will use the same internal unit, and based on that it tries to use those resources efficiently.

As we can see, Hyperthreading is beneficial if the CPU itself has a modern architecture that aims for parallel execution by:

- Enabling superscalar instruction execution and buffering a significant number of instructions.

- Having an advanced algorithm to determine instruction dependencies and which resources to allocate to which instruction.

- Having as many internal resources as it can support (multiple ALUs instead of a single one, multiple multiply units instead of a single one …).
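
The trade-off described in this section can be sketched with a deliberately simplified Python model. It assumes a core with one ALU and one multiply unit, where each unit accepts one instruction per cycle and each hardware thread issues its instructions in order, at most one per cycle. The function `run` and the instruction streams are hypothetical, and real cores are far more complex.

```python
def run(streams, units=("alu", "mul")):
    """Return the cycles needed to drain all instruction streams on one core.

    Each stream models one hardware thread issuing in order, one instruction
    per cycle at most. Each execution unit accepts one instruction per cycle.
    Every instruction must name a unit that exists in `units`.
    """
    queues = [list(s) for s in streams]
    cycles = 0
    while any(queues):
        free = set(units)                 # all units are free at cycle start
        for q in queues:
            if q and q[0] in free:        # issue only if the needed unit is free
                free.remove(q.pop(0))
        cycles += 1
    return cycles


alu_work = ["alu"] * 4
mul_work = ["mul"] * 4

serial = run([alu_work + mul_work])       # one hardware thread runs both jobs
smt_mixed = run([alu_work, mul_work])     # two hardware threads, different units
smt_conflict = run([alu_work, alu_work])  # two hardware threads, same unit
print(serial, smt_mixed, smt_conflict)
```

In this model, two hardware threads halve the cycle count when their instructions need different units (8 cycles down to 4), but bring no gain when both compete for the single ALU (still 8 cycles). That is why the benefit depends on how many internal execution resources the CPU has.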

Conclusion

In real life, software threads and hardware threads (Hyperthreading) can be used at the same time. It is only a matter of the use case and of when to deploy one or the other.

Each concept has its own advantages and drawbacks, but personally I believe the drawbacks mostly come from deploying software threads or Hyperthreading in the wrong use case.

Before jumping into coding, always make sure you understand your program's requirements and expectations. Then check what you have as inputs in terms of hardware resources (how many CPUs you have, whether your CPU supports Hyperthreading, and if so how many hardware threads it can handle …), as this will help you structure your program for efficient use of the available resources.