Rust Programming for Embedded: Balancing Memory Safety and Real-Time Constraints


Introduction

Embedded systems live in a unique world. They have to be safe, since a single bug can crash something as critical as a medical device or a car’s ECU, and they must operate in real time, where deadlines are measured in microseconds, not “whenever the scheduler decides.” Rust steps in with a compelling promise: memory safety without the overhead of a garbage collector.

On paper, that sounds ideal. In practice, though, things get complicated once you add interrupts, critical sections, and concurrency into the mix.

In this article, we’ll look at where Rust excels and where it can introduce extra challenges when dealing with real-time requirements. We’ll walk through examples involving interrupts, data races, and safe abstractions like mutexes and atomics, and we’ll explore how to strike a balance between Rust’s strong safety guarantees and the hard demands of real-time performance.

1. Why Memory Safety Is Hard in Embedded

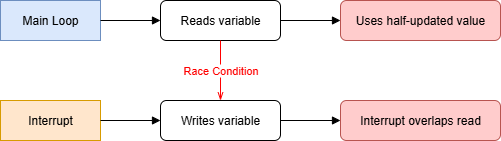

In embedded C, it’s common to rely on static or volatile globals to share state between the main loop and an interrupt handler. The approach is fast and simple, but it opens the door to subtle and catastrophic bugs. If an interrupt fires in the middle of a read-modify-write sequence, the main loop may be working with half-updated data, leading to data races and corrupted state. Worse, nothing in C prevents pointer misuse or out-of-bounds accesses, so these problems often go undetected until they crash in production. Rust takes a stricter stance: safe code cannot mutate a shared global at all (touching a static mut requires unsafe), so you have to guard access explicitly with safe abstractions.
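The out-of-bounds half of that problem is easy to show in a few lines. The sketch below assumes a hypothetical fixed-size sample log whose write index comes from outside (say, a count reported by a peripheral); store_sample and LOG_LEN are illustrative names, not part of any library. Slice get_mut is bounds-checked and returns None for an invalid index, where the equivalent C store would silently write past the end of the array. Plain indexing (log[i]) is checked too, but it panics instead, and on a no_std target what happens next is decided by your #[panic_handler].

```rust
// Hypothetical sample log; the index is treated as untrusted input.
const LOG_LEN: usize = 8;

/// Stores `sample` at `index`, or returns the rejected index if it is out of range.
fn store_sample(log: &mut [u16; LOG_LEN], index: usize, sample: u16) -> Result<(), usize> {
    // `get_mut` is bounds-checked: an out-of-range index yields None
    // instead of overwriting whatever memory follows the array.
    match log.get_mut(index) {
        Some(slot) => {
            *slot = sample;
            Ok(())
        }
        None => Err(index),
    }
}

fn main() {
    let mut log = [0u16; LOG_LEN];
    store_sample(&mut log, 3, 1234).unwrap();

    // In C, log[8] = 99 would corrupt adjacent memory; here it is rejected.
    if let Err(bad) = store_sample(&mut log, LOG_LEN, 99) {
        println!("rejected out-of-range index {bad}");
    }
    println!("{:?}", log);
}
```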

2. The Interrupt Problem

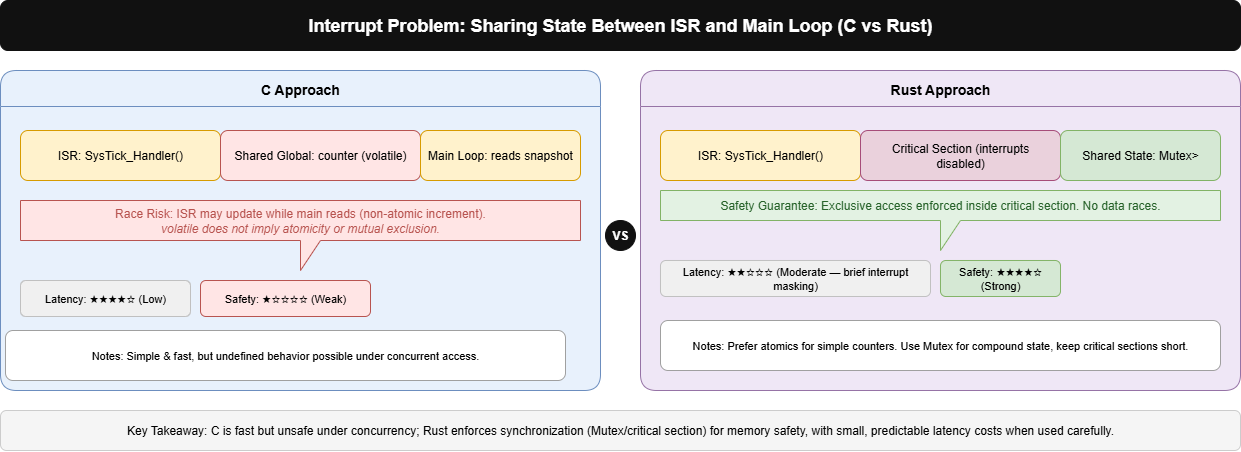

In embedded C, you can share a global variable between the main loop and an interrupt handler by marking it volatile, but volatile only stops the compiler from caching the value. It does not make updates atomic, so concurrent read-modify-write sequences can still lose updates or read torn values. Rust disallows that pattern in safe code: a static mut can only be accessed inside unsafe, because the compiler can’t prove exclusive access. Instead, Rust steers you toward safe wrappers such as a critical-section Mutex or the atomic types (AtomicU32 and friends), which enforce correctness at some runtime cost.

For example, here’s the difference:

C (fast, unsafe):

```c
static volatile int counter = 0;

void SysTick_Handler(void)
{
    counter++; // risk of race condition
}
```

Rust (safe, but with overhead):

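```rust
use core::cell::RefCell;
use cortex_m::interrupt::{free, Mutex};
use cortex_m_rt::exception;

// Mutex only hands out access inside a critical section;
// RefCell provides the interior mutability needed to modify the value.
static COUNTER: Mutex<RefCell<u32>> = Mutex::new(RefCell::new(0));

// #[exception] registers this function as the SysTick handler.
#[exception]
fn SysTick() {
    // `free` disables interrupts, runs the closure, then restores them.
    free(|cs| {
        *COUNTER.borrow(cs).borrow_mut() += 1;
    });
}
```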

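Here, cortex_m’s free function runs the closure inside a critical section: it disables interrupts on entry and restores the previous interrupt state on exit, so nothing else can touch COUNTER while it is borrowed. The price is interrupt latency, because every other interrupt is held off for the duration of the closure. For a one-line increment that is negligible; for long or frequent critical sections in a hard real-time system, it can blow the latency budget.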
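For something as small as a counter, an atomic can replace the critical section entirely. The sketch below assumes a core with native atomic read-modify-write instructions (ARMv7-M and later, e.g. Cortex-M3/M4), where fetch_add compiles to an exclusive load/store loop and interrupts stay enabled. On ARMv6-M parts such as Cortex-M0, AtomicU32 supports only load and store, so fetch_add is not available and you are back to a critical section or a crate like portable-atomic. The handler body is written as a plain function, on_systick (a hypothetical name), so the sketch also runs on a host.

```rust
use core::sync::atomic::{AtomicU32, Ordering};

// Shared tick counter: no Mutex, no RefCell, no critical section.
static TICKS: AtomicU32 = AtomicU32::new(0);

// On a target this would be the body of the SysTick handler.
fn on_systick() {
    // One atomic read-modify-write; interrupts stay enabled throughout.
    TICKS.fetch_add(1, Ordering::Relaxed);
}

// Main-loop side: a single atomic load, so the value is never torn.
fn ticks() -> u32 {
    TICKS.load(Ordering::Relaxed)
}

fn main() {
    for _ in 0..5 {
        on_systick();
    }
    println!("ticks = {}", ticks());
}
```

Relaxed ordering is enough here because the counter does not publish any other data. If the ISR also filled a buffer that the main loop reads after seeing the count change, the store side would need Release and the load side Acquire.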

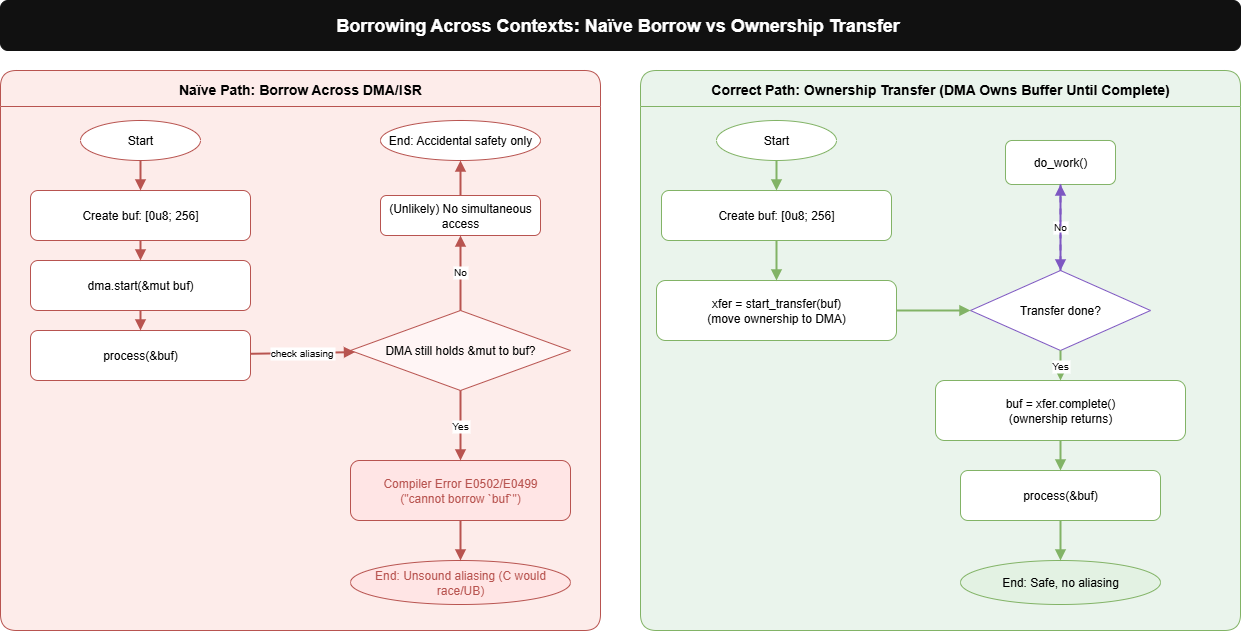

3. Borrowing Across Contexts

Hardware doesn’t wait for your function scopes. A DMA engine or an interrupt handler may keep using a buffer long after the call that passed it returns. In C, we routinely hand a pointer to an ISR/DMA and also keep using that memory in the main loop. Rust pushes back: if an interrupt or peripheral might still hold a mutable reference, the compiler prevents you from also reading/writing that buffer. The error feels annoying at first (“why won’t this compile?”), but it’s flagging a real race that would be undefined behavior in C.

A common failure mode looks like this:

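```rust
// Aliasing mutable borrow (`dma` and `process` are placeholders)
let mut buf = [0u8; 256];

let transfer = dma.start(&mut buf); // the returned handle keeps the &mut borrow alive
process(&buf);                      // error[E0502]: cannot borrow `buf` as immutable
                                    // because it is also borrowed as mutable
transfer.wait();                    // the borrow only ends when the handle is consumed
```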

Rust wants you to model the time dimension explicitly. Typical fixes:

- __Ownership transfer (typestate/RAII):__ move the buffer into a Transfer object that owns it while the hardware runs and gives it back when the transfer is done. No aliasing, no critical sections.

- __Ping–pong (double buffering):__ hardware fills A while the CPU processes B, then swap. Ownership moves, not borrows.

- __Lock-free queues/pools:__ e.g., bbqueue or ring buffers, where the ISR produces and the main loop consumes without either side ever holding a &mut to the same data at the same time.
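The lock-free option is less magical than it sounds. Below is a minimal hand-rolled single-producer/single-consumer byte ring, offered as a sketch rather than as bbqueue’s API. It assumes exactly one context (say, a UART receive interrupt) calls push and exactly one (the main loop) calls pop; SpscRing, push and pop are hypothetical names. Each side only writes its own index, and the Release store of that index is what publishes a slot to the other side, so no &mut is shared and no critical section is needed. It uses only atomic loads and stores, so it also fits cores without atomic read-modify-write. For production code, a maintained crate such as bbqueue or heapless::spsc is the safer choice.

```rust
use core::cell::UnsafeCell;
use core::sync::atomic::{AtomicUsize, Ordering};

/// SPSC byte ring. One slot stays empty to distinguish "full" from "empty",
/// so it holds at most N - 1 bytes. N must be at least 2.
struct SpscRing<const N: usize> {
    buf: UnsafeCell<[u8; N]>,
    head: AtomicUsize, // next slot the producer writes
    tail: AtomicUsize, // next slot the consumer reads
}

// Safety: sound only if exactly one context calls `push` and one calls `pop`.
unsafe impl<const N: usize> Sync for SpscRing<N> {}

impl<const N: usize> SpscRing<N> {
    const fn new() -> Self {
        Self {
            buf: UnsafeCell::new([0; N]),
            head: AtomicUsize::new(0),
            tail: AtomicUsize::new(0),
        }
    }

    /// Producer side (e.g. an RX interrupt). Returns false if the ring is full.
    fn push(&self, byte: u8) -> bool {
        let head = self.head.load(Ordering::Relaxed);
        let next = (head + 1) % N;
        // Acquire pairs with the consumer's Release store of `tail`.
        if next == self.tail.load(Ordering::Acquire) {
            return false; // full: drop the byte rather than block in an ISR
        }
        // The consumer will not read slot `head` until the store below publishes it.
        unsafe { self.buf.get().cast::<u8>().add(head).write(byte) };
        self.head.store(next, Ordering::Release);
        true
    }

    /// Consumer side (main loop). Returns None if the ring is empty.
    fn pop(&self) -> Option<u8> {
        let tail = self.tail.load(Ordering::Relaxed);
        // Acquire pairs with the producer's Release store of `head`.
        if tail == self.head.load(Ordering::Acquire) {
            return None;
        }
        let byte = unsafe { self.buf.get().cast::<u8>().add(tail).read() };
        // Releasing the slot lets the producer reuse it.
        self.tail.store((tail + 1) % N, Ordering::Release);
        Some(byte)
    }
}

static RX: SpscRing<8> = SpscRing::new();

fn main() {
    // Simulated ISR side: enqueue a few received bytes.
    for &b in b"ok!" {
        assert!(RX.push(b), "ring full");
    }
    // Main-loop side: drain whatever has arrived.
    while let Some(b) = RX.pop() {
        print!("{}", b as char);
    }
    println!();
}
```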

To make this concrete, here’s a minimal ownership-transfer pattern where the DMA takes the buffer and only gives it back once the transfer completes.

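```rust
use core::sync::atomic::{AtomicBool, Ordering};

// Ownership transfer: the DMA owns the buffer until completion.
// `dma`, `start_transfer`, `do_something_useful` and `process` are placeholders.
struct Transfer<B> {
    buf: Option<B>,
    done: &'static AtomicBool, // set by the DMA-complete interrupt
    // ... device registers, channel handle, etc.
}

impl<B> Transfer<B> {
    fn is_done(&self) -> bool {
        self.done.load(Ordering::Acquire)
    }

    fn complete(mut self) -> B {
        while !self.is_done() {} // never hand the buffer back mid-transfer
        // ... stop the channel and clear the IRQ flag here ...
        self.buf.take().unwrap()
    }
}

// The buffer needs a stable address: an array moved by value would be copied,
// while the DMA keeps writing to the old location.
let buf: &'static mut [u8; 256] = cortex_m::singleton!(: [u8; 256] = [0; 256]).unwrap();

let xfer = dma.start_transfer(buf); // moves the only &mut into the transfer

// Do other work; `buf` has been moved, so nothing here can alias it.
while !xfer.is_done() {
    do_something_useful();
}

let buf = xfer.complete(); // ownership returns here
process(&buf[..]);         // now it's safe again
```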

The same idea scales into a ping–pong scheme: the DMA fills one buffer while the CPU processes the other, then they swap ownership.

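```rust
// `dma.start_ping_pong`, `take_full` and `give_empty` are placeholder driver APIs.
// The buffers need stable addresses (a moved array would be copied), hence &'static mut.
let a: &'static mut [u8; 256] = cortex_m::singleton!(: [u8; 256] = [0; 256]).unwrap();
let b: &'static mut [u8; 256] = cortex_m::singleton!(: [u8; 256] = [0; 256]).unwrap();

let mut pp = dma.start_ping_pong(a, b);

loop {
    if let Some(full) = pp.take_full() { // take ownership of a full buffer
        process(&full[..]);
        pp.give_empty(full);             // hand it back as the new empty
    }
}
```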
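Because the ping-pong driver above is a placeholder, here is a host-runnable model of the same hand-off with the hardware side simulated in software. PingPong, simulate_fill, take_full and give_empty are hypothetical names chosen to mirror the snippet, and this take_full swaps immediately instead of checking a hardware "buffer complete" flag as a real driver would. The point it illustrates is that swapping buffers is just moving &mut references, so the borrow checker guarantees the CPU and the "DMA" never hold the same buffer at once.

```rust
/// Host-side model of a ping-pong DMA: the "hardware" owns one buffer
/// while the CPU owns the other.
struct PingPong<'a> {
    filling: &'a mut [u8],       // buffer the simulated DMA writes into
    spare: Option<&'a mut [u8]>, // empty buffer waiting to be swapped in
}

impl<'a> PingPong<'a> {
    fn new(a: &'a mut [u8], b: &'a mut [u8]) -> Self {
        PingPong { filling: a, spare: Some(b) }
    }

    /// Stand-in for the DMA writing one block into the active buffer.
    fn simulate_fill(&mut self, value: u8) {
        self.filling.fill(value);
    }

    /// Swap buffers: start filling the spare and hand the full one to the CPU.
    /// Returns None while the CPU still holds the previous full buffer.
    fn take_full(&mut self) -> Option<&'a mut [u8]> {
        let empty = self.spare.take()?;
        Some(core::mem::replace(&mut self.filling, empty))
    }

    /// The CPU returns a processed buffer so it can be refilled.
    fn give_empty(&mut self, buf: &'a mut [u8]) {
        self.spare = Some(buf);
    }
}

fn main() {
    let mut a = [0u8; 4];
    let mut b = [0u8; 4];
    let mut pp = PingPong::new(&mut a, &mut b);

    for block in 1..=3u8 {
        pp.simulate_fill(block); // "DMA" fills the active buffer
        if let Some(full) = pp.take_full() {
            let sum: u32 = full.iter().map(|&x| u32::from(x)).sum();
            println!("block {block}: sum = {sum}");
            pp.give_empty(full); // ownership moves back; no shared &mut
        }
    }
}
```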

Conclusion

Real-time embedded work is about balancing deadlines with safety. Rust makes that tension explicit: ownership rules prevent data races and push you toward atomics, buffer hand-offs, and minimal critical sections. It’s not real-time for free, but by modeling time and sharing in the type system, you catch bugs at compile time instead of on the oscilloscope.